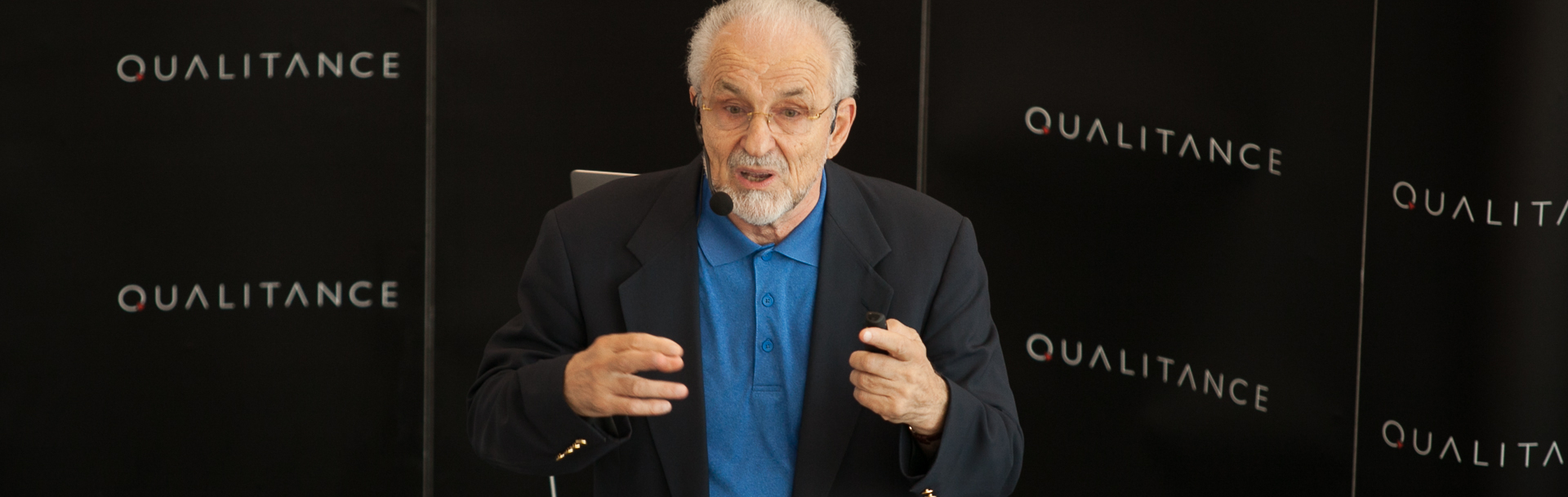

Last week’s FUTURE HORIZONS event was about Philosophical Considerations in Artificial Intelligence, and Professor Dan Cautis rocked the audience with eye-opening insights, which is one of his many talents.

There was a lot of talk about transhumanism, the dynamics between causation and correlation, Bayesian networks, and plenty of algorithms and statistics concepts. These are not topics you often get to hear about, let alone understand (I’m speaking for myself). Yet Professor Cautis places them in the story of man trying to surpass his own limits, to increase his power and reach higher than the gods, from Prometheus to this day. Naturally, a lot of complex things start to make sense (watch the video of the talk and you’ll see what I mean).

Before we get to Professor Dan Cautis answering a few burning questions from our online audience, here are some ideas that stayed with me long after the talk.

Man a/o The Machine. The inspiration of transhumanism.

Traveling through the centuries in which man constantly reinvented his purpose was enlightening. With Artificial Intelligence entering the story, we got to ponder science and/or religion. Some might think that man is reaching a sort of divine level, since he’s replicating himself in the machine. Others might even say that man is creating the machine in his own image.

Regardless, everyone asks pretty much the same questions: Will the machine be as intelligent as man? More intelligent? Will the machine be conscious? Will the machine be able to build (its own) consciousness? These questions are the seeds of alternative theories, which brings me to the core of the talk: the philosophical component of Artificial Intelligence.

W/O Correlation, causation, semantics and syntax

Among many compelling topics, the talk brought to the surface what scientists and computer scientists miss out on by acting like the coolest kids in town and ignoring what philosophers might have to add. Would it be wrong to say that, from this perspective, philosophers are to computer scientists what semantics is to syntax? And isn’t it odd that computers only do syntax? They don’t do semantics, at least not yet.

Still, I bet we’d be able to solve a lot of problems if correlation told us the cause, or if we could measure causation directly. But it turns out we cannot measure causation; we can only measure correlation. Hearing the professor say that Google makes billions by ignoring causation suddenly made me see this internet titan in an unexpected light.
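To make the correlation-versus-causation point a little more concrete, here is a minimal Python sketch. The setup is my own hypothetical illustration, not something from the talk: a hidden common cause `z` drives both `x` and `y`, so the two come out strongly correlated even though neither one causes the other. The variable names, sample size and noise levels are arbitrary assumptions.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    vx = sum((a - mx) ** 2 for a in xs)
    vy = sum((b - my) ** 2 for b in ys)
    return cov / (vx * vy) ** 0.5

# Hypothetical setup: a hidden common cause z drives both x and y.
# x never appears in the formula for y, so x does not cause y.
rng = random.Random(42)  # fixed seed so the run is repeatable
z = [rng.gauss(0, 1) for _ in range(10_000)]
x = [zi + rng.gauss(0, 0.5) for zi in z]
y = [zi + rng.gauss(0, 0.5) for zi in z]

print(f"corr(x, y) = {pearson(x, y):.2f}")
```

With this setup the theoretical correlation is 1 / 1.25 = 0.8, and the sample estimate lands close to it, yet changing `x` directly would do nothing to `y`. The correlation number alone cannot tell "x causes y" apart from "something else causes both".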

Watch out for the HYPE! Mind the confusion.

Let’s face it, Artificial Intelligence is one of the rock stars of the technology world and beyond. There’s so much hype around AI that even people who know little about it can feel its ever-growing presence.

It makes sense to be in awe of what you don’t understand. But when you understand more than the average person, because you’re a scientist or a computer scientist, you need to pause and examine the hype through as many filters as possible. You can apply the filters of philosophers and other specialists and scrutinize every layer they bring to light in Artificial Intelligence, or in any other emerging technology. This is what Professor Cautis advises.

Q & A with Professor Dan Cautis

As my writing makes obvious, I had a lot of questions. So did our audience, both during the Q & A session and online. Since some of them were left unanswered, Professor Dan Cautis gladly addressed them here.

#1 Do you see consciousness as a variable property of a system, growing proportionally with its computing power, or rather as a Boolean property related to some other quality of the system?

Neither. To develop a good understanding of consciousness, we need a mature, agreed-upon metaphysics that explains reality. At one point there was such a metaphysics, developed by the scholastics: I am referring to the Aristotelian-Thomistic (A-T) one. But it was rejected in favor of the Cartesian-Galilean-Newtonian (CGN) mechanistic metaphysics of modern science.

The A-T metaphysics was fairly well developed and consistent, but it is unacceptable in modern (science-based) times because it relies on immaterial properties of the spirit (consciousness?) that are accepted in classical theism. The modern CGN metaphysics is mechanistic and radically reductionist, and it does not seem to have a good path toward explaining the emergence of consciousness. It seems fairly clear to me that, without progress (and consensus) in improving this reductionist metaphysics, it will be hard (maybe impossible?) to explain consciousness. See Thomas Nagel’s “Mind and Cosmos” for a better explanation of the quandary.

#2 What role does quantum computing have in finding causality?

Right now, not much. Quantum computing developers seem to be busy creating a real working device (I am using “device” as a general term for a working, proven system that is reliable and repeatable). Although quantum computing holds many theoretical promises, researchers are still working hard to mature the technology to the point where many people can use it and discover remarkable applications.

Although the promise is still there, the technology is not mature yet; give it a few more decades and then we will know better.

#3 What is your take on the technological singularity?

I believe that the technological singularity is a very interesting speculation based on some good observations of technological development (like Kurzweil’s law of accelerating returns). Although Kurzweil’s observations are very interesting and track a number of recent developments in technological progress, his law is not a “law of nature” like gravity or the stoichiometry of chemical reactions.

In other words, it is not inevitable: it may or may not happen. And it definitely does not seem imminent, at least not in the sense of conscious superintelligent machines arriving soon, when it is not yet clear how to achieve Artificial General Intelligence (let alone Artificial Super-Intelligence). However, we should not ignore the real danger of us humans developing so many extremely complex systems that are already close to (or beyond) our capacity to fully understand and/or predict.

Extreme complexity is very dangerous (because its unfathomable failure modes are hard to comprehend and control), and it does not need to reach the level of superintelligence to create serious dangers for our society.

#4 At some point, you said that humans want to augment their cognitive powers beyond what the mind-brain of today can do. I couldn’t agree more. The progress in Artificial Intelligence so far tells us we’re getting better and better at simulating intelligence in machines. But do you think we’ll be able to simulate consciousness too?

As far as I understand the studies that try to understand and simulate consciousness (which are fairly simplistic for now), I don’t believe it will be possible to simulate it within the next few decades, or even longer. Will we never be able to simulate it? That is a very hard question, and I don’t have an answer to it.

However, given that right now we don’t have much of an idea of what consciousness is, I don’t think that kind of major breakthrough in neuroscience is possible anytime soon.
